Published: 2021-06-12. [Attention Mechanisms] Attention Augmented Convolutional Networks


Attention Mechanisms: Attention Augmented Convolutional Networks

Original link: https://www.yuque.com/lart/papers/aaconv

Main content

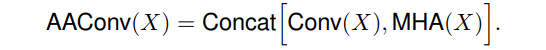

We propose to augment convolutional operators with this self-attention mechanism by concatenating convolutional feature maps with a set of feature maps produced via self-attention.

Main work

First, note two properties of the convolution operation itself:

- Locality: locality via a limited receptive field

- Translation equivariance: translation equivariance via weight sharing

- The Convolution Operator is Translation Equivariant meaning it preserves Translations however the CNN processing allows for Translation Invariance which is achieved by means of a proper (i.e. related to spatial features) dimensionality reduction. https://aboveintelligent.com/ml-cnn-translation-equivariance-and-invariance-da12e8ab7049

- While convolutions are translation equivariant and not invariant, an approximative translation invariance can be achieved in neural networks by combining convolutions with spatial pooling operators. https://chriswolfvision.medium.com/what-is-translation-equivariance-and-why-do-we-use-convolutions-to-get-it-6f18139d4c59
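The difference between equivariance and invariance quoted above can be checked numerically. The following is a minimal NumPy sketch, assuming a 1D signal and circular (wrap-around) borders so that shifts are exact; the helper `circular_conv1d` and the toy values are hypothetical and only serve as an illustration.

```python
import numpy as np

def circular_conv1d(signal, kernel):
    """Cross-correlate `signal` with `kernel`, wrapping around at the borders."""
    n, k = len(signal), len(kernel)
    return np.array([
        sum(kernel[t] * signal[(i + t) % n] for t in range(k))
        for i in range(n)
    ])

signal = np.array([0.0, 1.0, 3.0, 0.0, 0.0, 2.0, 0.0, 0.0])
kernel = np.array([1.0, -1.0, 0.5])
shift = 3

# Equivariance: shifting the input shifts the output by the same amount.
out_then_shift = np.roll(circular_conv1d(signal, kernel), shift)
shift_then_out = circular_conv1d(np.roll(signal, shift), kernel)
equivariant = np.allclose(out_then_shift, shift_then_out)

# Invariance: a global max pooling on top discards the position altogether.
pooled = circular_conv1d(signal, kernel).max()
pooled_shifted = shift_then_out.max()
invariant_after_pooling = np.isclose(pooled, pooled_shifted)
```

With zero padding instead of wrap-around, the equality only holds away from the borders, which is one reason the invariance obtained in practice is approximate.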

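Although these properties have proven to be crucial inductive biases when designing models that operate on images, the local nature of the convolutional kernel prevents it from capturing global context, which is often necessary for image recognition. This is a key weakness of convolution (the convolution operator is limited by its locality and lack of understanding of global contexts).

Self-attention, on the other hand, has emerged as a recent advance for capturing long range interactions. The key idea behind self-attention is to produce a weighted average of values computed from hidden units. Unlike in the convolution or pooling operations, these weights depend on the input features: they are produced dynamically via a similarity function between hidden units. As a result, the interaction between input signals depends on the signals themselves rather than being predetermined by their relative location, as it is in convolutions.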
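As a rough illustration of "a weighted average of values with dynamically produced weights", here is a minimal NumPy sketch. It assumes a scaled dot product as the similarity function and uses random stand-ins for the hidden units and values; none of the names come from the paper.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

rng = np.random.default_rng(0)
n_units, d = 5, 4
hidden = rng.normal(size=(n_units, d))  # hidden units, one per row
values = rng.normal(size=(n_units, d))  # values computed from the hidden units (random stand-ins)

# Similarity function (assumed here): scaled dot product between hidden units.
weights = softmax(hidden @ hidden.T / np.sqrt(d))

# Each output row is a weighted average of all value rows.
output = weights @ values
```

Because `weights` is recomputed from `hidden`, a different input produces a different mixing pattern, whereas a convolution kernel applies the same weights to every input.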

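The paper therefore tries to bring self-attention into convolutional models in order to capture long range interactions, and considers using self-attention as a replacement for ordinary convolutions in discriminative visual tasks. It introduces a novel two-dimensional relative self-attention mechanism that maintains translation equivariance while being infused with relative position information, which makes it well suited for images.

This mechanism proves competitive as a standalone computational unit that replaces convolutions. Note, however, that in control experiments the best results are obtained when self-attention and convolutions are combined. The paper therefore does not abandon convolutions entirely, but proposes to augment convolutions with the self-attention mechanism: convolutional feature maps, which enforce locality, are concatenated with self-attentional feature maps capable of modeling longer range dependencies to obtain the final result.

Across multiple experiments, attention augmented convolution yields consistent improvements. In addition, the fully self-attentional model (without the convolutional part), which can be seen as a special case of the attention augmented model, performs only slightly worse than its fully convolutional counterpart on ImageNet. This indicates that self-attention is a powerful standalone computational primitive for image classification.

Regarding the notion of a primitive, here is an explanation I found: a primitive is the most basic concept in the whole system.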

https://stackoverflow.com/a/8022435

For me, it means something that cannot be decomposed (people use also the atomic word sometimes in that sense, but atomic is often also used for explanation on concurrency or parallelism with a different meaning).

For instance, on Unix (or Linux) the system calls, as seen by the application are primitive or atomic, they either happen or not (sometimes, they got interrupted and give an EINTR or ERESTART error).

And inside an interpreter, or even in the formal specification, of a language, the primitive are those operations which you cannot define, and which the interpreter deals with specially. Very often, cons is a primitive operation for Lisp dialects.

The paper also mentions other work on attention in visual tasks:

- reweigh feature channels using signals aggregated from entire feature maps

  - Squeeze-and-Excitation [SENet]

  - Gather-Excite [http://papers.nips.cc/paper/8151-gather-excite-exploiting-feature-context-in-convolutional-neural-networks.pdf]

- refine convolutional features independently in the channel and spatial dimensions

  - BAM [Bam: bottleneck attention module]

  - CBAM [Cbam: Convolutional block attention module]

- the additive use of a few non-local residual blocks that employ self-attention in convolutional architectures

  - non-local neural networks

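Compared with existing approaches, the architecture proposed here does not rely on pre-training of its fully convolutional counterparts; instead, the whole network uses the self-attention mechanism. In addition, the use of multi-head attention allows the model to attend jointly to spatial subspaces and feature subspaces. (Multi-head attention splits the features along the channel dimension into groups and transforms each group independently, which yields more diverse feature representations.)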

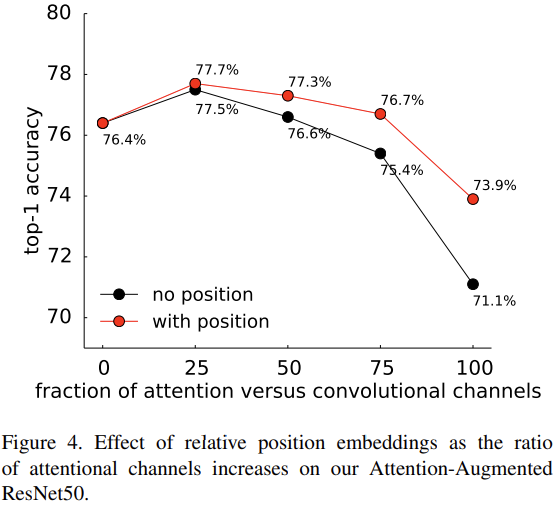

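In addition, to increase the representational power of self-attention over images, the paper extends the relative self-attention of [Self-attention with relative position representations, Music transformer] to two-dimensional inputs, which makes it possible to model translation equivariance in a principled way.

Such a structure directly produces additional feature maps, rather than recalibrating convolutional features via addition [Non-local neural networks, Self-attention generative adversarial networks] or gating [Squeeze-and-excitation networks, Gather-excite: Exploiting feature context in convolutional neural networks, Bam: bottleneck attention module, Cbam: Convolutional block attention module]. This property allows the fraction of attentional channels to be adjusted flexibly, covering a spectrum of architectures, ranging from fully convolutional to fully attentional models.

Main structure

- \(H, W, F_{in}\): height, width and number of channels of the input feature map

- \(N_h, d_v, d_k\): the number of heads, the depth of the values (i.e. the number of feature map channels), and the depth of the queries and keys (these are all parameters of MHA, multi-head attention). \(d_v\) and \(d_k\) must be divisible by \(N_h\); \(d^h_v\) and \(d^h_k\) denote the depth of the values and of the queries/keys per head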

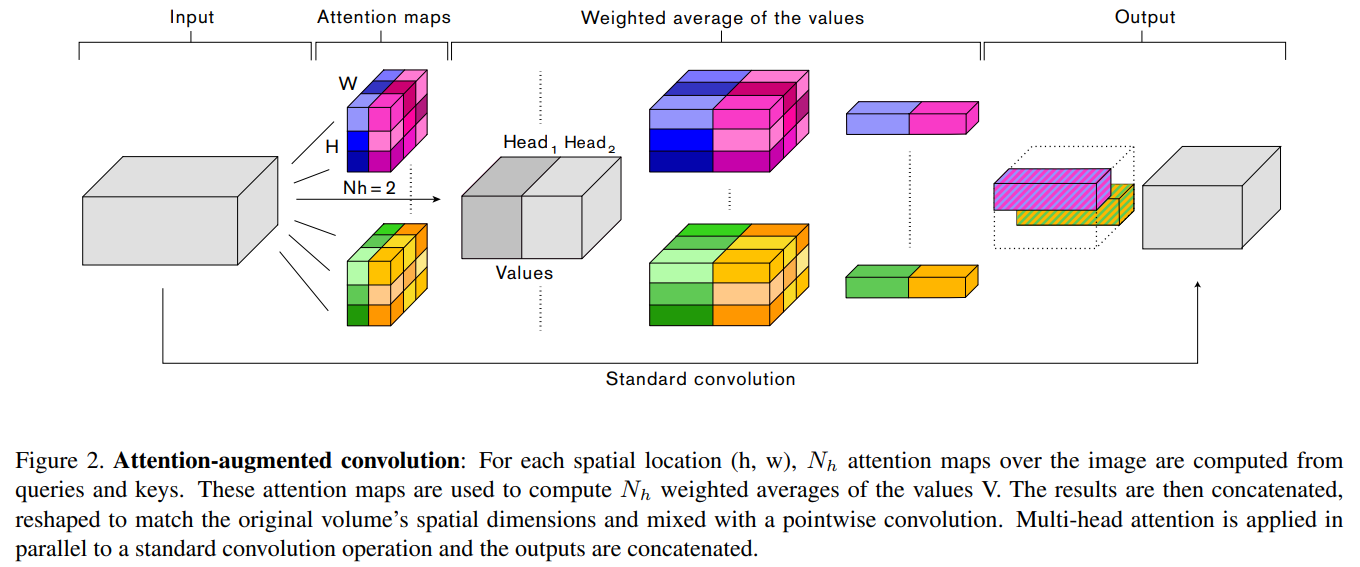

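Computing multi-head attention on image data

How multi-head attention is computed

Multi-head attention is the concatenation of single heads

- in_tensor \((H,W,F_{in})\) =(flatten)=> X \((HW,F_{in})\) (We omit the batch dimension for simplicity.)

- Compute multi-head attention following the Transformer architecture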

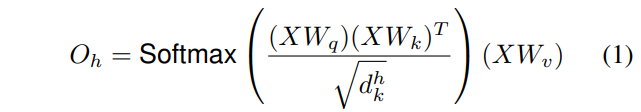

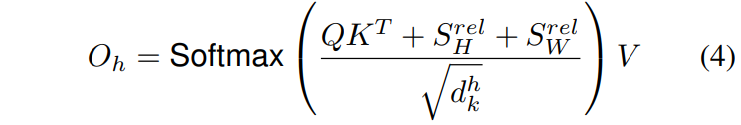

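- The self-attention output for head h is given by Equation 1, where \(W_q\)/\(W_k\)/\(W_v\) have shapes \((F_{in}, d^h_q)/(F_{in}, d^h_k)/(F_{in}, d^h_v)\) (with \(d^h_q = d^h_k\)) and project the input X to the queries \(Q=XW_q\), keys \(K=XW_k\) and values \(V=XW_v\), whose shapes are \((HW, d^h_q)/(HW, d^h_k)/(HW, d^h_v)\)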

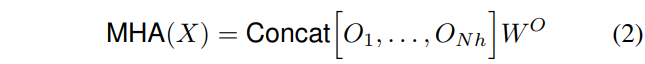

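- The outputs of all heads are concatenated and then processed according to Equation 2, where \(W^O \in \mathbb{R}^{d_v \times d_v}\) (the concatenation of the \(N_h\) head outputs \(O_h\) has depth \(d_v\), i.e. \(d_v=N_h \times d^h_v\)). After the MHA computation, the result is reshaped to \((H, W, d_v)\) to match the original spatial dimensions

- multi-head attention

  - Computational complexity: \(O((HW)^2d_k)\) (only the dominant \((XW_q)(XW_k)^T\) computation needs to be considered)

  - Memory complexity: \(O((HW)^2N_h)\) (this includes the attention maps of all \(N_h\) heads)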
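The shape bookkeeping above can be traced with a small NumPy sketch. It is a simplified illustration rather than the paper's implementation: there are no positional terms, the weights are random, and the function name `multi_head_attention` and the toy sizes are assumptions.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def multi_head_attention(x, w_q, w_k, w_v, w_o, n_heads):
    """x: (HW, F_in); w_q, w_k: (F_in, d_k); w_v: (F_in, d_v); w_o: (d_v, d_v)."""
    hw = x.shape[0]
    d_k, d_v = w_q.shape[1], w_v.shape[1]
    assert d_k % n_heads == 0 and d_v % n_heads == 0
    dkh, dvh = d_k // n_heads, d_v // n_heads
    # Project, then split the channel axis into heads: (N_h, HW, depth per head).
    q = (x @ w_q).reshape(hw, n_heads, dkh).transpose(1, 0, 2)
    k = (x @ w_k).reshape(hw, n_heads, dkh).transpose(1, 0, 2)
    v = (x @ w_v).reshape(hw, n_heads, dvh).transpose(1, 0, 2)
    # The (N_h, HW, HW) attention maps dominate both compute and memory.
    weights = softmax(q @ k.transpose(0, 2, 1) / np.sqrt(dkh))
    heads = weights @ v                                   # (N_h, HW, d_v / N_h)
    concat = heads.transpose(1, 0, 2).reshape(hw, d_v)    # (HW, d_v)
    return concat @ w_o, weights

H, W, F_in, d_k, d_v, N_h = 4, 5, 8, 6, 12, 3
rng = np.random.default_rng(0)
image = rng.normal(size=(H, W, F_in))
x = image.reshape(H * W, F_in)                            # flatten the spatial axes
w_q = rng.normal(size=(F_in, d_k))
w_k = rng.normal(size=(F_in, d_k))
w_v = rng.normal(size=(F_in, d_v))
w_o = rng.normal(size=(d_v, d_v))

out, weights = multi_head_attention(x, w_q, w_k, w_v, w_o, N_h)
out = out.reshape(H, W, d_v)                              # back to the spatial layout
```

The `weights` array has \(N_h (HW)^2\) entries, which is the memory term listed above.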

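Two-dimensional Positional Embeddings

"Two-dimensional" here is relative to the original one-dimensional structure designed for language: the input here is two-dimensional image data.

Because no explicit position information is used, self-attention commutes with permutations: \(MHA(\pi(X))=\pi(MHA(X))\), where \(\pi\) denotes any permutation of the pixel locations. In other words, self-attention is permutation equivariant. This property makes it ineffective for modeling highly structured data such as images.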
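The identity \(MHA(\pi(X))=\pi(MHA(X))\) can be verified numerically. The sketch below assumes a single head with random projection weights, which is enough to show the effect; the function name `self_attention` and the sizes are hypothetical.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(x, w_q, w_k, w_v):
    """Single-head attention without any positional information."""
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    return softmax(q @ k.T / np.sqrt(q.shape[1])) @ v

rng = np.random.default_rng(0)
n_pixels, f_in, d = 12, 6, 4
x = rng.normal(size=(n_pixels, f_in))
w_q, w_k, w_v = (rng.normal(size=(f_in, d)) for _ in range(3))

perm = rng.permutation(n_pixels)  # an arbitrary reordering of the pixels
permute_then_attend = self_attention(x[perm], w_q, w_k, w_v)
attend_then_permute = self_attention(x, w_q, w_k, w_v)[perm]
is_equivariant = np.allclose(permute_then_attend, attend_then_permute)
```

Shuffling the pixels only shuffles the outputs, so the layer cannot tell a natural image from a scrambled one unless position information is added.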

Several positional encodings that augment activation maps with explicit spatial information have been proposed to alleviate this issue:

- Image Transformer extends the sinusoidal waves first introduced in the original Transformer to 2 dimensional inputs.

- CoordConv concatenates positional channels to an activation map.

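The experiments in the paper find that these encodings do not help much for image classification and object detection. The authors attribute this to the fact that, although these strategies break permutation equivariance, they do not preserve the translation equivariance that image tasks require. The paper therefore extends the existing relative position encodings [Self attention with relative position representations] to two dimensions and presents a memory efficient implementation based on the Music Transformer.

Relative positional embeddings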
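For reference, the CoordConv idea listed above (concatenating positional channels to an activation map) can be sketched as follows. This is an assumption-laden illustration: it adds only x and y channels, the normalisation to [-1, 1] is assumed, and `add_coord_channels` is a hypothetical helper.

```python
import numpy as np

def add_coord_channels(feature_map):
    """feature_map: (H, W, C) -> (H, W, C + 2) with y and x coordinate channels."""
    h, w, _ = feature_map.shape
    ys = np.linspace(-1.0, 1.0, h)  # assumed normalisation to [-1, 1]
    xs = np.linspace(-1.0, 1.0, w)
    yy, xx = np.meshgrid(ys, xs, indexing="ij")  # each of shape (H, W)
    return np.concatenate([feature_map, yy[..., None], xx[..., None]], axis=-1)

rng = np.random.default_rng(0)
fmap = rng.normal(size=(4, 6, 3))
with_coords = add_coord_channels(fmap)

# A translated input receives exactly the same coordinate channels, so the
# positional part does not move together with the content.
shifted = add_coord_channels(np.roll(fmap, 1, axis=1))
same_coords = np.array_equal(with_coords[..., 3:], shifted[..., 3:])
```

The last check hints at why absolute encodings of this kind break permutation equivariance without giving translation equivariance: the position signal stays fixed while the content shifts.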

Introduced in [Self attention with relative position representations] for the purpose of language modeling, relative self-attention augments self-attention with relative position encodings and enables translation equivariance while preventing permutation equivariance.

Here, two-dimensional relative self-attention is implemented by independently adding relative width and relative height information.

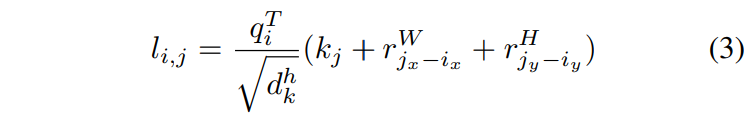

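The attention logit for how much pixel \(i=(i_x, i_y)\) attends to pixel \(j=(j_x, j_y)\) is computed as \(l_{i,j} = \frac{q_i^T}{\sqrt{d^h_k}}(k_j + r^W_{j_x-i_x} + r^H_{j_y-i_y})\), where:

- \(q_i\) is the query vector for position \(i\), i.e. a row of Q of length \(d^h_k\).

- \(k_j\) is the key vector for position \(j\), i.e. a row of K of length \(d^h_k\).

- \(r^W_{j_x-i_x}\) and \(r^H_{j_y-i_y}\) are learned embeddings for the relative width \(j_x-i_x\) and the relative height \(j_y-i_y\); each is a vector of length \(d^h_k\).

- The corresponding relative position parameter matrices \(r^W\) and \(r^H\) have shapes \((2W-1, d^h_k)\) and \((2H-1, d^h_k)\).

The output of a single head h becomes \(O_h = \mathrm{Softmax}\left(\frac{QK^T + S^{rel}_H + S^{rel}_W}{\sqrt{d^h_k}}\right)V\).

Here \(S^{rel}_H\) and \(S^{rel}_W\) are both \(HW \times HW\) matrices of relative position logits along the height and width dimensions, with \(S^{rel}_H[i, j]=q_i^Tr^H_{j_y-i_y}\) and \(S^{rel}_W[i, j]=q_i^Tr^W_{j_x-i_x}\).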

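Because relative height and width information are considered separately, \(S^{rel}_W[i, j]=S^{rel}_W[i, j+W]\) and \(S^{rel}_H[i, j]=S^{rel}_H[i, j+H]\) hold, so logits do not have to be computed for all (i, j) pairs. This can be understood as follows (this is my own understanding): for a two-dimensional matrix, take the row direction as the W direction (horizontal), i.e. the x direction, and the column direction as the H direction (vertical), i.e. the y direction. Then for any point \(j\) and a fixed point \(i\):

- In \(S^{rel}_W\), \((j_x-i_x)\%W=[(j+nW)_x-i_x]\%W\): moving \(nW\) positions forward in row-major order stays in the same column;

- In \(S^{rel}_H\), \((j_y-i_y)\%H=[(j+nH)_y-i_y]\%H\): moving \(nH\) positions forward in column-major order stays in the same row.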
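The two periodicity properties can be checked with a naive NumPy construction of the logit matrices. This sketch follows the reading given above (row-major flattening for the width term, column-major flattening for the height term); the sizes and random tensors are hypothetical.

```python
import numpy as np

H, W, d = 3, 4, 5
rng = np.random.default_rng(0)
q = rng.normal(size=(H * W, d))        # one query per pixel
r_w = rng.normal(size=(2 * W - 1, d))  # row m + W - 1 embeds the relative width m
r_h = rng.normal(size=(2 * H - 1, d))  # row m + H - 1 embeds the relative height m

# Row-major flattening: index = y * W + x, so x = index % W.
x_of = np.arange(H * W) % W
s_w = np.einsum("id,ijd->ij", q, r_w[x_of[None, :] - x_of[:, None] + W - 1])

# Column-major flattening: index = x * H + y, so y = index % H.
y_of = np.arange(H * W) % H
s_h = np.einsum("id,ijd->ij", q, r_h[y_of[None, :] - y_of[:, None] + H - 1])

# Column j and column j + W (or j + H) hold identical logits.
width_periodic = np.allclose(s_w[:, :-W], s_w[:, W:])
height_periodic = np.allclose(s_h[:, :-H], s_h[:, H:])
```

Only W (respectively H) distinct columns exist per query, which is what lets an implementation compute the logits along one axis at a time and broadcast them along the other.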

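The relative attention used here differs from the design in the original paper Self attention with relative position representations, which has a memory cost of \(O((HW)^2d^h_k)\) (relative embeddings \(r_{ij} \in \mathbb{R}^{HW \times HW \times d^h_k}\)). Instead, it builds on the memory efficient relative masked attention algorithm proposed in Music Transformer and extends it to unmasked relative self-attention over 2 dimensional inputs, which reduces the memory cost to \(O(HWd^h_k)\) (the relative position embeddings \(r_{ij}\) are split into two parts, \(r^H \in \mathbb{R}^{(2H-1) \times d^h_k}\) and \(r^W \in \mathbb{R}^{(2W-1 )\times d^h_k}\), which are shared across heads but not across layers). Each layer therefore only needs \((2(H + W) - 2)d^h_k\) additional parameters to model relative distances along height and width.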
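The memory saving comes from never materialising one embedding per (i, j) pair. A minimal 1D NumPy sketch of the pad-and-reshape trick is shown below and compared against the naive gather; it mirrors the `rel_to_abs` helper in the code section of this post, without the batch and head axes, and the sizes are hypothetical.

```python
import numpy as np

def rel_to_abs(x):
    """(L, 2L-1) relative-indexed logits -> (L, L) absolute-indexed logits."""
    L = x.shape[0]
    x = np.concatenate([x, np.zeros((L, 1))], axis=1)        # (L, 2L)
    flat = np.concatenate([x.reshape(-1), np.zeros(L - 1)])  # (2L*L + L - 1,)
    # Reshaping to rows of length 2L-1 shifts each row by one more step,
    # which lines up offset j - i with absolute column j.
    return flat.reshape(L + 1, 2 * L - 1)[:L, L - 1:]        # (L, L)

L, d = 6, 4
rng = np.random.default_rng(0)
q = rng.normal(size=(L, d))
r = rng.normal(size=(2 * L - 1, d))  # row m + L - 1 embeds the relative offset m

rel_logits = q @ r.T                 # (L, 2L-1), one logit per relative offset
fast = rel_to_abs(rel_logits)

# Naive reference: gather r_{j-i} for every (i, j) pair, which costs O(L^2 * d) memory.
offsets = np.arange(L)[None, :] - np.arange(L)[:, None] + L - 1
naive = np.einsum("id,ijd->ij", q, r[offsets])
matches = np.allclose(fast, naive)
```

The fast path stores only the \((2L-1) \times d\) embedding table and the \(L \times (2L-1)\) logits, while the naive path builds an \(L \times L \times d\) tensor first.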

Attention Augmented Convolution

Main advantages of the attention augmented convolution proposed in the paper:

- use an attention mechanism that can attend jointly to spatial and feature subspaces (each head corresponding to a feature subspace)

- introduce additional feature maps rather than refining them

The main computation of AAConv: \(\mathrm{AAConv}(X) = \mathrm{Concat}[\mathrm{Conv}(X), \mathrm{MHA}(X)]\)

Similarly to the convolution, the proposed attention augmented convolution

- is equivariant to translation

- can readily operate on inputs of different spatial dimensions
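The channel bookkeeping of the concatenation can be sketched in NumPy. This is a deliberately reduced illustration, not the paper's layer: a 1x1 convolution (a per-pixel matrix product) stands in for the standard convolution, attention uses a single head without positional terms or output projection, and `aaconv_sketch` is a hypothetical name.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def aaconv_sketch(x, w_conv, w_q, w_k, w_v):
    """x: (H, W, F_in) -> (H, W, F_out), with F_out = conv channels + d_v."""
    h, w, f_in = x.shape
    flat = x.reshape(h * w, f_in)
    conv_out = flat @ w_conv                               # (HW, F_out - d_v)
    q, k, v = flat @ w_q, flat @ w_k, flat @ w_v
    attn_out = softmax(q @ k.T / np.sqrt(q.shape[1])) @ v  # (HW, d_v)
    out = np.concatenate([conv_out, attn_out], axis=1)     # (HW, F_out)
    return out.reshape(h, w, -1)

F_in, F_out, d_k, d_v = 8, 16, 4, 6
rng = np.random.default_rng(0)
w_conv = rng.normal(size=(F_in, F_out - d_v))
w_q = rng.normal(size=(F_in, d_k))
w_k = rng.normal(size=(F_in, d_k))
w_v = rng.normal(size=(F_in, d_v))

# The same weights handle inputs of different spatial sizes.
small = aaconv_sketch(rng.normal(size=(5, 7, F_in)), w_conv, w_q, w_k, w_v)
large = aaconv_sketch(rng.normal(size=(9, 11, F_in)), w_conv, w_q, w_k, w_v)
```

Setting `d_v` to 0 would leave a purely convolutional layer and setting it to `F_out` a purely attentional one, which is the spectrum of architectures mentioned earlier.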

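Below, the parameter count of AAConv is analyzed against that of an ordinary convolution \((F_{out}, F_{in}, k, k)\):

- Let \(v=\frac{d_v}{F_{out}}\) be the ratio of attentional (MHA) output channels to the total number of AAConv output channels;

- Let \(\kappa = \frac{d_k}{F_{out}}\) be the ratio of the key depth in MHA to the total number of AAConv output channels.

- A \(1 \times 1\) convolution computes Q, K and V by linear projection, which takes \((d_v + d_k + d_q)F_{in} = (2d_k + d_v)F_{in}=(v + 2\kappa)F_{out}F_{in}\) parameters;

- An additional \(1\times1\) convolution mixes the contribution of different heads, which takes \(d_vd_v=(vF_{out})^2\) parameters;

- Besides the attention part there is also a standard convolution, i.e. the Conv in the formula above, with \(k^2(F_{out} - d_v)F_{in} = k^2(1 - v)F_{out}F_{in}\) parameters;

- Ignoring the relative position embeddings and the convolution biases, the total parameter count is therefore about \(F_{in}F_{out}(2\kappa + v + v^2\frac{F_{out}}{F_{in}} + k^2 - k^2v)=F_{in}F_{out}(2\kappa + v(1-k^2) + k^2 + v^2\frac{F_{out}}{F_{in}})\);

- The change in parameters relative to the convolution is \(\Delta_{params}\sim F_{in}F_{out}(2\kappa + v(1-k^2) + v^2\frac{F_{out}}{F_{in}})\), so replacing a 3x3 convolution slightly decreases the parameter count, while replacing a 1x1 convolution slightly increases it.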
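The parameter analysis can be replayed with a few lines of plain Python. The channel sizes below (\(F_{in}=F_{out}=256\), \(d_k=d_v=64\), i.e. \(\kappa=v=0.25\)) are hypothetical values chosen only to make the arithmetic concrete; biases and relative position embeddings are ignored, as in the text.

```python
def aaconv_params(f_in, f_out, k, d_k, d_v):
    """Parameters of an attention augmented convolution (no biases, no embeddings)."""
    qkv = (2 * d_k + d_v) * f_in         # 1x1 convolution producing Q, K and V
    mix = d_v * d_v                      # 1x1 convolution mixing the heads
    conv = k * k * (f_out - d_v) * f_in  # remaining standard convolution
    return qkv + mix + conv

def conv_params(f_in, f_out, k):
    """Parameters of a plain k x k convolution (no biases)."""
    return k * k * f_out * f_in

def delta_formula(f_in, f_out, k, d_k, d_v):
    """Closed form of the change in parameters given in the text."""
    kappa, v = d_k / f_out, d_v / f_out
    return f_in * f_out * (2 * kappa + v * (1 - k * k) + v * v * f_out / f_in)

f_in = f_out = 256
d_k = d_v = 64

delta_3x3 = aaconv_params(f_in, f_out, 3, d_k, d_v) - conv_params(f_in, f_out, 3)
delta_1x1 = aaconv_params(f_in, f_out, 1, d_k, d_v) - conv_params(f_in, f_out, 1)
print(delta_3x3)  # -94208: fewer parameters than the 3x3 convolution
print(delta_1x1)  # 36864: more parameters than the 1x1 convolution
```

For these sizes the direct count and the closed form agree, and the sign of the change flips between the 3x3 and 1x1 cases as stated above.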

Attention Augmented Convolutional Architectures

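- In all experiments, AAConv is followed by batch normalization (BN), which can learn to scale the contribution of the convolutional and attentional feature maps.

- AAConv is applied once per residual block.

- Because the QK product has a large memory footprint, AAConv is applied starting from the deepest layers and moving toward the shallower ones until the memory limit is reached.

- To reduce the memory footprint of augmented networks, we typically resort to a smaller batch size and sometimes additionally downsample the inputs to self-attention in the layers with the largest spatial dimensions where it is applied (this presumably means downsampling before and upsampling after the attention computation). Downsampling is performed by applying 3x3 average pooling with stride 2 while the following upsampling (required for the concatenation) is obtained via bilinear interpolation.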
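A quick back-of-the-envelope calculation shows why downsampling the attention inputs helps. The sketch below counts attention weights per image using the \(O((HW)^2N_h)\) memory term; the 56x56 feature map, the 8 heads and the pooling padding of 1 are assumptions made for illustration and are not taken from the paper.

```python
def attention_weight_count(h, w, n_heads):
    """Number of attention weights per image: N_h * (HW)^2."""
    return n_heads * (h * w) ** 2

def pooled_size(size, kernel=3, stride=2, padding=1):
    """Output size of a pooling layer; padding=1 is an assumption made here."""
    return (size + 2 * padding - kernel) // stride + 1

h = w = 56   # hypothetical feature map size
n_heads = 8  # hypothetical number of heads

full = attention_weight_count(h, w, n_heads)
pooled = attention_weight_count(pooled_size(h), pooled_size(w), n_heads)
reduction = full // pooled
```

Halving each spatial side divides the number of pixels by 4 and the number of attention weights by 16, which is why the paper applies downsampling only in the layers with the largest spatial dimensions.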

Experimental results

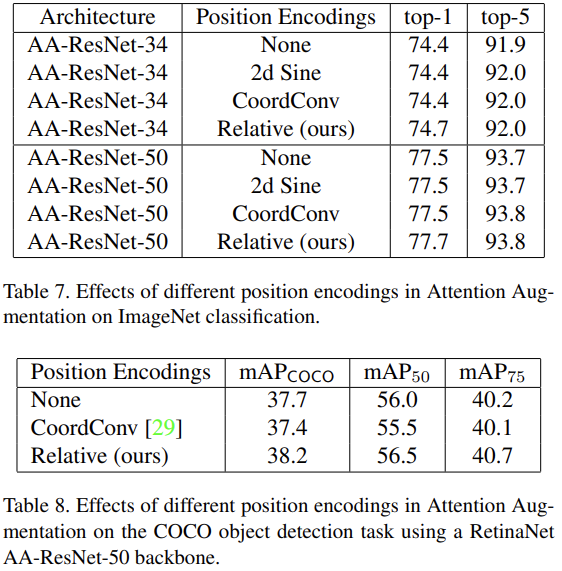

Position encodings

- the position-unaware version of self-attention (referred to as None),

- a two-dimensional implementation of the sinusoidal positional waves (referred to as 2d Sine) as used in [32],

- CoordConv [29] for which we concatenate (x, y, r) coordinate channels to the inputs of the attention function,

- our proposed two-dimensional relative position encodings (referred to as Relative).

Future work

- Several open questions from this work remain. In future work, we will focus on the fully attentional regime and explore how different attention mechanisms trade off computational efficiency versus representational power. For instance, identifying a local attention mechanism may result in an efficient and scalable computational mechanism that could prevent the need for downsampling with average pooling [Stand-alone self-attention in vision models].

- Additionally, it is plausible that architectural design choices that are well suited when exclusively relying on convolutions are suboptimal when using self-attention mechanisms. As such, it would be interesting to see if using Attention Augmentation as a primitive in automated architecture search procedures proves useful to find even better models than those previously found in image classification [55], object detection [12], image segmentation [6] and other domains [5, 1, 35, 8].

- Finally, one can ask to which degree fully attentional models can replace convolutional networks for visual tasks.

Code example

Following the TensorFlow implementation in the authors' paper, I rewrote it in PyTorch.

```python
import torch
from einops import rearrange
from torch import nn


def rel_to_abs(x):
    """
    Converts tensor from relative to absolute indexing.

    Details can be found at: https://www.yuque.com/lart/ugkv9f/oazsec

    :param x: B Nh L 2L-1
    :return: B Nh L L
    """
    B, Nh, L, _ = x.shape
    # Pad to shift from relative to absolute indexing.
    # new_zeros keeps the padding on the same device and dtype as x.
    col_pad = x.new_zeros(B, Nh, L, 1)
    x = torch.cat([x, col_pad], dim=3)
    flat_x = x.reshape(B, Nh, L * 2 * L)
    flat_pad = x.new_zeros(B, Nh, L - 1)
    flat_x = torch.cat([flat_x, flat_pad], dim=2)
    # Reshape and slice out the padded elements.
    final_x = flat_x.reshape(B, Nh, L + 1, 2 * L - 1)
    final_x = final_x[:, :, :L, L - 1:]
    return final_x


def relative_logits_1d(x, rel_k):
    """
    Compute relative logits along one dimension.

    :param x: B Nh Hd L
    :param rel_k: 2L-1 Hd
    """
    rel_logits = torch.einsum("bndl, rd -> bnlr", x, rel_k)
    rel_logits = rel_to_abs(rel_logits)  # B Nh L 2L-1 -> B Nh L L
    return rel_logits


class RelativePosEmbedding(nn.Module):
    """
    Compute relative_logits.

    For ease, we 1) transpose height and width, 2) repeat the above steps and 3) transpose to eventually
    put the logits in their right positions.
    """

    def __init__(self, h, w, dim):
        super(RelativePosEmbedding, self).__init__()
        self.h = h
        self.w = w
        # Register the embeddings as parameters so that they are trained and moved with the module.
        # The scale is passed as std; passed positionally it would be taken as the mean.
        self.rel_emb_w = nn.Parameter(torch.empty(2 * w - 1, dim))
        nn.init.normal_(self.rel_emb_w, std=dim ** -0.5)
        self.rel_emb_h = nn.Parameter(torch.empty(2 * h - 1, dim))
        nn.init.normal_(self.rel_emb_h, std=dim ** -0.5)

    def forward(self, x):
        """
        :param x: B Nh Hd HW
        :return: B Nh HW HW
        """
        Nh = x.shape[1]
        # Relative logits in width dimension first.
        rel_logits_w = relative_logits_1d(
            rearrange(x, "b nh hd (h w) -> b (nh h) hd w", h=self.h, w=self.w), self.rel_emb_w
        )
        rel_logits_w = rearrange(rel_logits_w, "b (nh h) w0 w1 -> b nh h () w0 w1", nh=Nh)
        # Relative logits in height dimension next.
        rel_logits_h = relative_logits_1d(
            rearrange(x, "b nh hd (h w) -> b (nh w) hd h", h=self.h, w=self.w), self.rel_emb_h
        )
        rel_logits_h = rearrange(rel_logits_h, "b (nh w) h0 h1 -> b nh h0 h1 w ()", nh=Nh)
        # Broadcasting adds the width term over key rows and the height term over key columns.
        return rearrange(rel_logits_h + rel_logits_w, "b nh h0 h1 w0 w1 -> b nh (h0 w0) (h1 w1)")


class AbsolutePosEmbedding(nn.Module):
    """
    Given query q of shape [batch heads dim tokens] we multiply
    q by a learned embedding for every absolute token position.
    Learned embedding representations are shared across heads
    """

    def __init__(self, h, w, dim):
        super().__init__()
        scale = dim ** -0.5
        self.abs_pos_emb = nn.Parameter(torch.randn(h * w, dim) * scale)

    def forward(self, x):
        """
        :param x: B Nh Hd HW
        :return: B Nh HW HW
        """
        return torch.einsum("bndx, yd -> bnxy", x, self.abs_pos_emb)


class SelfAttention3D(nn.Module):
    def __init__(self, in_dim, key_dim, value_dim, nh, hw, pos_mode="relative"):
        super(SelfAttention3D, self).__init__()
        self.dkh = key_dim // nh
        self.dvh = value_dim // nh
        self.nh = nh
        self.key_dim = key_dim
        self.value_dim = value_dim
        self.kqv_proj = nn.Conv2d(in_dim, 2 * key_dim + value_dim, 1)
        self.out_proj = nn.Conv2d(value_dim, value_dim, 1)
        if pos_mode == "relative":
            self.position_embedding = RelativePosEmbedding(h=hw[0], w=hw[1], dim=self.dkh)
        elif pos_mode == "absolute":
            self.position_embedding = AbsolutePosEmbedding(h=hw[0], w=hw[1], dim=self.dkh)
        else:
            # Position-unaware attention: no positional logits are added.
            self.position_embedding = None

    def split_heads_and_flatten(self, _x):
        return rearrange(_x, "b (nh hd) h w -> b nh hd (h w)", nh=self.nh)

    def forward(self, x):
        """
        :param x: B C H W
        """
        # Compute q, k, v
        k, q, v = self.kqv_proj(x).split([self.key_dim, self.key_dim, self.value_dim], dim=1)
        q = q * self.dkh ** -0.5  # scaled dot-product
        # After splitting, shape is [B, Nh, dkh or dvh, HW]
        q, k, v = map(self.split_heads_and_flatten, (q, k, v))
        # [B, Nh, HW, HW]
        logits = torch.einsum("bndx, bndy -> bnxy", q, k)
        if self.position_embedding is not None:
            # Add the positional logits to the content logits instead of replacing them.
            logits = logits + self.position_embedding(q)
        weights = logits.softmax(-1)
        attn_out = torch.einsum("bnxy, bndy -> bndx", weights, v)
        attn_out = rearrange(attn_out, "b nd hd (h w) -> b (nd hd) h w", h=x.shape[2], w=x.shape[3])
        # Project heads
        attn_out = self.out_proj(attn_out)
        return attn_out


class AugmentedConv2d(nn.Module):
    def __init__(self, in_dim, out_dim, kernel_size, key_dim, value_dim, num_heads, hw, pos_mode):
        super(AugmentedConv2d, self).__init__()
        self.std_conv = nn.Conv2d(in_dim, out_dim - value_dim, kernel_size, padding=kernel_size // 2)
        self.attention = SelfAttention3D(
            in_dim, key_dim=key_dim, value_dim=value_dim, nh=num_heads, hw=hw, pos_mode=pos_mode
        )

    def forward(self, x):
        conv_out = self.std_conv(x)
        attn_out = self.attention(x)
        # Concatenate convolutional and attentional feature maps along the channel axis.
        return torch.cat([conv_out, attn_out], dim=1)


if __name__ == "__main__":
    m = AugmentedConv2d(
        in_dim=4, out_dim=64, kernel_size=3, key_dim=32, value_dim=48, num_heads=2, hw=(10, 10), pos_mode="relative"
    )
    print(m(torch.randn(4, 4, 10, 10)).shape)  # torch.Size([4, 64, 10, 10])
```

Open questions

- What is the difference between permutation equivariance and translation equivariance?

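Supplementary knowledge

Self-attention takes three inputs: query Q, key K and value V. What exactly does each of them represent? The following points are excerpted from https://www.cnblogs.com/rosyYY/p/10115424.html:

- Q, K and V all contain embedded representations of the original data

- Why is Q called the query?

  - Because each time one embedding is used to "query" how well it matches every other embedding, i.e. the attention strength

- K and V denote key and value. Explanations of this vary and none seems authoritative. The Zhihu article "From Seq2seq to the Attention Model to Self Attention (II)" (https://zhuanlan.zhihu.com/p/47470866) mentions: "the key and value originate from the paper Key-Value Memory Networks for Directly Reading Documents. In NLP, Key and Value usually both point to the same word embedding vector". I will not go into further detail for now.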

Related links

- Paper: https://arxiv.org/pdf/1904.09925.pdf

- Code: https://GitHub.com/leaderj1001/Attention-Augmented-Conv2d

- Analysis: https://www.jiqizhixin.com/articles/2019-04-26-7

- multi-head attention: https://www.cnblogs.com/rosyYY/p/10115424.html

- From Seq2seq to the Attention Model to Self Attention (II), Zhihu article: https://zhuanlan.zhihu.com/p/47470866

- The Illustrated Transformer: https://jalammar.github.io/illustrated-transformer/

  - Chinese translation: https://blog.csdn.net/qq_42208267/article/details/84967446

- Self-attention Mechanism in natural language processing: https://www.cnblogs.com/robert-dlut/p/8638283.html

- https://kexue.fm/archives/4765